If the above means essentially the same as below then I disagree.

{If the BMS is set to cut off at the charger's CV (absorb voltage), no CV charging will happen.}

Absorb phase is not when the charger voltage and battery voltage are equal.

Absorb phase is when the cell can't draw the configured current, because the voltage differential is not high enough.

In order for any flow to happen there has to be a voltage differential.

This may be a difference in how we are defining the terms.

The most commonly used terms for 3-stage charging are BULK, ABSORB, and FLOAT.

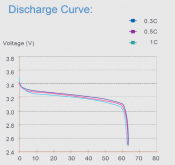

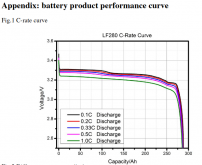

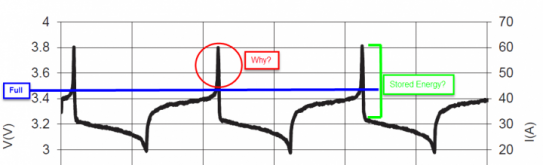

BULK charge in my mind is the constant current mode where the charger will keep the current at a set level as the battery voltage is climbing. This puts the BULK of the energy into the battery. BULK mode ends when the battery voltage hits the desired maximum charge voltage. If you are charging at a very high current, and then terminate the BULK charge to no current at all, the battery voltage is going to fall back a fair amount. How far will depend on all of the resistances in the system, including internal cell resistance. This has been described as a rubber band effect. The greater the charge current, the further the rubber band will stretch.
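To put rough numbers on the rubber band effect, here is a minimal sketch that treats the whole path (wiring, connections, internal cell resistance) as one lumped resistance. The function names and the 4 milliohm figure are my own assumptions for illustration, not measured values.

```python
def terminal_voltage(internal_v, charge_a, resistance_ohm):
    """Voltage seen at the terminals while charge current is flowing."""
    return internal_v + charge_a * resistance_ohm


def rebound_drop(charge_a, resistance_ohm):
    """How far the terminal voltage falls back when charge current stops."""
    return charge_a * resistance_ohm


# Assumed lumped resistance of 4 milliohms per cell path (hypothetical).
R_OHM = 0.004

for amps in (10.0, 50.0):
    drop = rebound_drop(amps, R_OHM)
    print(f"{amps:>4.0f} A charge -> falls back about {drop:.3f} V when current stops")
```

With the same resistance, five times the current stretches the rubber band five times as far.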

ABSORB charge is normally the stage directly after the bulk charge. During ABSORB, the charger holds the battery terminal voltage constant at the desired maximum voltage. As the actual internal cell voltage climbs closer to the terminal voltage, the current naturally falls off, because the difference between the internal chemical voltage and the terminal voltage shrinks, so there is less voltage across the internal resistance. The cell is ABSORBING more energy while its voltage stabilizes at the charger's constant voltage.
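Here is a rough sketch of how BULK hands over to ABSORB and why the current tapers on its own. It is a toy model, not a real cell model: I am assuming a single lumped resistance and an internal voltage that rises linearly with the amp-hours put in (real LFP curves are much flatter in the middle and steeper at the ends). Every parameter value is a made-up illustration.

```python
def simulate_cc_cv(v_set=14.0, i_bulk=50.0, r_ohm=0.02, internal_v=12.0,
                   volts_per_ah=0.01, tail_a=2.0, dt_h=0.01):
    """Return a list of (phase, current_a, terminal_v) samples.

    Toy model: terminal_v = internal_v + current * r_ohm, and internal_v
    rises linearly with charge delivered (a deliberate simplification).
    """
    samples = []
    while True:
        # Current that would flow if the charger held v_set at the terminals.
        i_cv = (v_set - internal_v) / r_ohm
        if i_cv >= i_bulk:
            # Charger is current limited: terminal voltage is still below v_set.
            phase, current = "BULK", i_bulk
        else:
            # Charger holds v_set; the shrinking differential sets the current.
            phase, current = "ABSORB", i_cv
        if phase == "ABSORB" and current <= tail_a:
            break  # tail current reached, absorb terminates
        samples.append((phase, current, internal_v + current * r_ohm))
        internal_v += current * dt_h * volts_per_ah
    return samples


samples = simulate_cc_cv()
absorb = [s for s in samples if s[0] == "ABSORB"]
print(f"bulk steps: {len(samples) - len(absorb)}, absorb steps: {len(absorb)}")
print(f"absorb current: {absorb[0][1]:.1f} A falling to {absorb[-1][1]:.1f} A")
```

In this model the absorb phase begins the moment the charger can no longer push the full bulk current at the set voltage, and it ends when the current has tapered to the tail value. A lower resistance or a lower bulk current shortens the absorb phase, which matches the point about low internal resistance cells.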

FLOAT charge is not normally used on most Lithium chemistries. It can be beneficial, though, if there is a light constant load or the cells have some internal leakage. FLOAT charge is similar to ABSORB in that it holds a constant voltage, but at a lower voltage, so it just keeps the battery from losing charge over an extended time. If the battery is cycling every day, this is certainly not needed. Float is normally used for backup-only power systems where you want to keep the battery full for a long time.

What you are describing with

"Absorb phase is when the cell can't draw the configured current, because the voltage differential is not high enough."

is the termination or end of the absorption phase.

On cells with very low internal resistance at a lower charge current, the absorb phase could be very short.

As for the original discussion... Let's assume the battery bank is a 4S 12 volt LFP system, and the goal is to get the battery to just 80% charge. I am using guesstimate numbers for voltage to SoC. Feel free to look up more accurate numbers, but I am using a large imbalance to show a point.

Using the BMS to do the cutoff, the BMS is set to terminate charge when any single cell hits just 3.5 volts. With all 4 cells balanced, that is a total pack voltage of 14 volts. Even if you have a balancer that can pull 200 mA, starting when a cell exceeds 3.2 volts, it won't be doing much at all when the system is charging at, say, 50 amps. That is just reducing the current to the high cell by 0.4%. If you have one cell that is just 1% down in capacity, it will still be climbing faster than the other 3. That cell will hit 3.5 volts and all charge current will stop. We don't know how far out of balance the pack is, but it won't be getting better. If they were 0.2 volts out of balance, this would be huge. The top cell, which is likely a lower capacity cell (which is why it charged up faster), has reached 80% of its charge capacity, but the other 3 are at only 3.3 volts, which might only be 60% of their capacity. In this setup, you only ever get to use 80% of the weakest, lowest capacity cell.
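The arithmetic behind that BMS cutoff example, as a quick sketch. The numbers are the same guesstimates used above (3.5 volt cell cutoff, 0.2 volt imbalance, 200 mA balancer, 50 amp charge); the variable names are just mine.

```python
CHARGE_A = 50.0      # bulk charge current
BALANCE_A = 0.2      # balancer bleed current (200 mA)
CUTOFF_V = 3.5       # BMS per-cell cutoff
IMBALANCE_V = 0.2    # worst-case spread between high and low cells

# Share of the charge current the balancer diverts from the high cell.
balancer_share = BALANCE_A / CHARGE_A

# Cell voltages at the instant the BMS trips: one high cell, three low cells.
cells_at_cutoff = [CUTOFF_V] + [CUTOFF_V - IMBALANCE_V] * 3
pack_v_at_cutoff = sum(cells_at_cutoff)

print(f"balancer diverts {balancer_share:.1%} of the charge current")
print(f"pack voltage when the BMS trips: {pack_v_at_cutoff:.1f} V")
```

So with that imbalance, the BMS stops the charge with the pack well short of the 14 volts a balanced pack would reach, and the charger never gets anywhere near a constant-voltage phase.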

Now if the charge controller was set to go from BULK to ABSORB at 14 volts, the current will just start to drop when 14 volts total is reached. If the pack is the same 0.2 volts off balance from the highest to lowest cell, which is very bad, the 3 low cells are all at 3.45 volts when the top cell hits 3.65 volts to reach the 14 volts total. Yes, this is a huge imbalance, but I'm trying to show a worst case here. So the charge voltage will now hold constant and the current will start dropping. That 3.65 volt cell has the balancer loading it at 200 mA, and the charge current is dropping, but still not off. The voltage on the high cell will naturally fall some from the reduced current, and a little more from the balancer load, but the other 3 cells are still climbing. Depending on how low the tail-off current is allowed to go, it could drop below the 200 mA of balance current, but in most cases it won't. At some point, the charge current will be down to where the absorb charge just terminates. The longer the absorb lasts, and the lower the current can go, the better the balancer will be able to "top balance" all of the cells. Even with a short absorb time, the one lower capacity cell might be at its 95% charge, and the other 3 get to their 79% charge. With a longer absorb, the bottom 3 will creep up more, and the top one will drag down more, so they get even closer. Your usable capacity gets closer to the good cells' 80%, and the low capacity cell has to use more of its capacity to keep up.
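A small sketch of why the tapering current helps the balancer. I am assuming a simple bleed-type balancer that loads only the high cell with a fixed 200 mA; the function name and the sample currents are hypothetical.

```python
def net_cell_currents(charge_a, balance_a=0.2):
    """Return (high_cell_a, other_cells_a) while the balancer bleeds the high cell.

    Assumes a fixed bleed load on the high cell only (a simplification).
    """
    return charge_a - balance_a, charge_a


# Pack voltage when absorb starts: three low cells plus the one high cell.
pack_v = 3 * 3.45 + 3.65
print(f"pack voltage at start of absorb: {pack_v:.2f} V")

# As the absorb current tapers, the balancer's share of it grows.
for charge_a in (50.0, 5.0, 1.0, 0.1):
    high_a, others_a = net_cell_currents(charge_a)
    print(f"charge {charge_a:>5.1f} A -> high cell {high_a:+.2f} A, other cells {others_a:+.2f} A")
```

At 50 amps the bleed is lost in the noise, but once the current is down near an amp it is a real fraction of what the high cell receives, and below 200 mA the high cell is actually being pulled down while the other 3 keep climbing.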

If you have higher current active balancing, or much more closely matched cells, then the difference between the 2 setups will shrink. Having a charge controller actually CONTROLLING the charge will make better use of the batteries. Even if you are not targeting a full charge, having it absorb charge at a lower voltage is still good for maintaining balance in the pack, assuming the balancer can be configured to run below your chosen absorb voltage.