chladekj

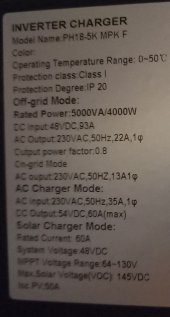

Which setting is better for my LiFePO4 battery and MUST PV1800 5kW VHM inverter?

Setting:

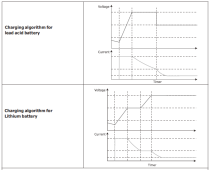

Does anyone know how the lithium charging algorithm works on this inverter?

Or what is the difference between the lithium and lead-acid charging algorithms?

Thanks for your opinions and for the discussion.

Setting:

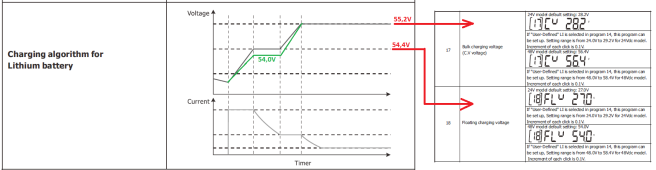

- Battery type Lithium (par. 14 = Li)

- Bulk charging voltage 55,2V (par. 17)

- Float charging voltage 54,4V (par. 18)

- Question: where does the 54,0V come from? I could not find any parameter with this value.

- The manual only contains a graph, without any explanation.
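For readers unfamiliar with the terms "bulk" and "float", the minimal sketch below shows how a generic three-stage (bulk / absorption / float) charger typically switches stages. It is NOT the MUST PV1800 firmware logic, which the manual does not document. Only the two voltages come from the settings listed above (par. 17 and par. 18). The tail-current threshold, the absorption timer, and all names (`ChargerConfig`, `next_stage`, `target_voltage`) are hypothetical and chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class ChargerConfig:
    bulk_v: float = 55.2           # par. 17 from the post (bulk / CV setpoint)
    float_v: float = 54.4          # par. 18 from the post (float setpoint)
    tail_current_a: float = 2.0    # hypothetical: end absorption below this current
    max_absorb_s: float = 3600.0   # hypothetical: absorption time limit in seconds


def next_stage(stage: str, cfg: ChargerConfig, battery_v: float,
               charge_current_a: float, absorb_elapsed_s: float) -> str:
    """Return the next stage: 'bulk', 'absorption' or 'float' (generic model)."""
    if stage == "bulk":
        # Constant current until the battery voltage reaches the bulk setpoint.
        return "absorption" if battery_v >= cfg.bulk_v else "bulk"
    if stage == "absorption":
        # Hold bulk_v until the current tapers off or the timer expires.
        if (charge_current_a <= cfg.tail_current_a
                or absorb_elapsed_s >= cfg.max_absorb_s):
            return "float"
        return "absorption"
    # This simplified sketch never returns from float to bulk.
    return "float"


def target_voltage(stage: str, cfg: ChargerConfig) -> float:
    """Voltage the charger regulates to in the given stage."""
    return cfg.float_v if stage == "float" else cfg.bulk_v


if __name__ == "__main__":
    cfg = ChargerConfig()
    stage = "bulk"
    # (battery voltage, charge current, seconds spent in absorption)
    for v, i, t in [(52.0, 40.0, 0), (55.2, 30.0, 0), (55.2, 1.5, 600)]:
        stage = next_stage(stage, cfg, v, i, t)
        print(stage, target_voltage(stage, cfg))
```

In this model, the charger holds 55,2V until the current falls below the assumed tail threshold and then drops to 54,4V. Whether the inverter uses a timer, a tail current, or something else for its lithium profile is exactly what the question above asks.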